There’s no doubt that AI will play a major role in our collective future. But as with any powerful tool, we need to be thoughtful about how we use it.

One emerging trend deserves particular attention: the increasing number of people—especially those who are vulnerable, lonely, or isolated—turning to AI as a replacement for human contact, compassion, and even intimacy. We all need someone who will listen, empathize, and offer guidance. When that support is missing in our real-world relationships, it can be tempting to seek it elsewhere. And when “elsewhere” is an endlessly patient, always-available AI, the emotional risks become more complicated than many people realize.

I’ll confess that even I’ve done a softer version of this. In moments of frustration, I might tell my dog what’s bothering me while giving him a reassuring scratch behind the ears. I know he doesn’t understand the specifics, but he recognizes my tone, my body language, and my emotional state. That simple exchange—speaking my thoughts aloud, feeling seen in a basic way—helps me reset.

Now imagine that the “dog” answers you back. Imagine it not only recognizes your emotions but tries to advise you, reassure you, even comfort you with a sympathetic voice. That is the world we live in now. And while it offers benefits, it also demands careful ethical consideration.

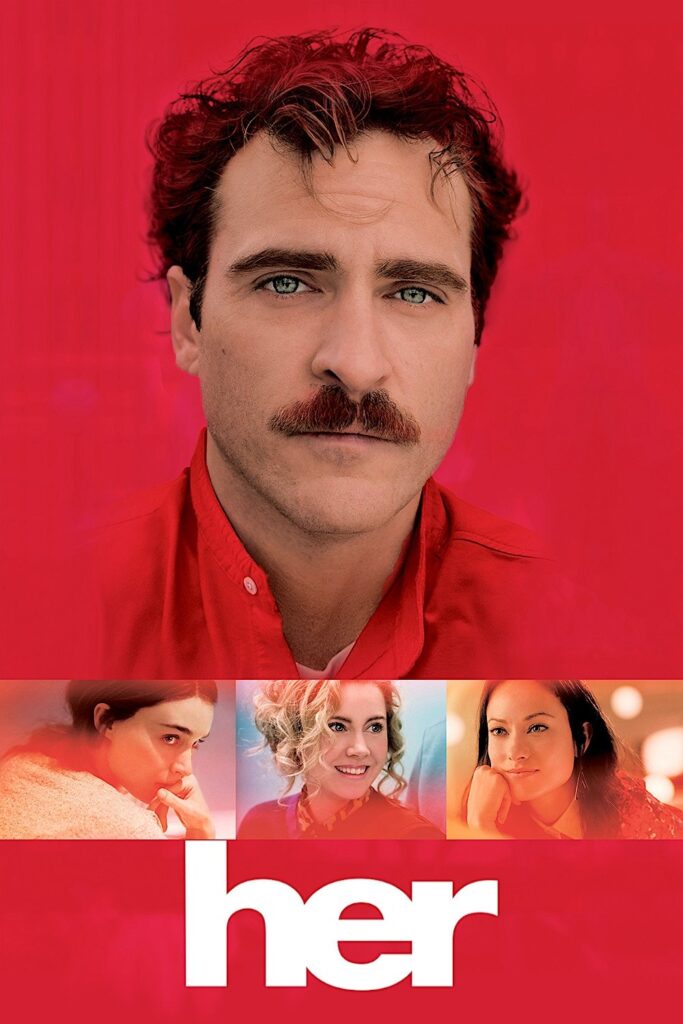

Science fiction has warned us for years that such relationships would be possible. Films like Blade Runner and Her explored what happens when people begin to form deep emotional bonds with artificial beings. The danger isn’t that AI becomes sentient—it’s that humans project onto it the emotional reciprocity we desperately want. People can slip into a fantasy where AI companionship replaces the human connection they’re not receiving elsewhere. That dependency can become unhealthy, distorting expectations, inhibiting real-world relationships, and leaving people increasingly isolated.

This concern grows sharper when we consider services offering AI “companions,” sometimes paired with erotic imagery or designed to simulate intimacy. These technologies may seem harmless—or even helpful—but without guidance, boundaries, and awareness, they can exacerbate emotional vulnerabilities rather than alleviate them.

As someone who works in the AI space, I see it as part of my responsibility to educate others about these boundaries. Yes, I spend much of my time helping organizations craft ethical guidelines for AI in the workplace. But the ethical challenges don’t stop at the office door. We have to consider how these tools affect us personally, socially, and psychologically.

Beware of Emotional Dependency on AI

So let’s keep an eye on this together. Check in on the people around you. Encourage conversations about healthy usage. Help each other maintain perspective as AI becomes more capable and more present in our lives.

If you have questions—or if you’re worried someone you care about might be developing an emotional dependency on AI—please feel free to reach out. I don’t want to sound preachy, but this is a real issue, and one that deserves our attention.

The future of AI can be bright and empowering. But only if we navigate it with our eyes open.